Since April I've been using an image-generating artificial intelligence program called MidJourney. Previously my experience of the cutting edge of AI had been putting a few basic requests into ChatGPT, none of which I found particularly inspiring. I'd discovered MidJourney via a Twitter post from Mary Harrington, who was commenting on the "nefarious" atmosphere of some of the purportedly more photorealistic images produced by the AI. The images in question were of groups of attractive young women, seemingly hanging out at a party. The composition was naturalistic, like a candid shot taken with an old Polaroid. Only when I looked closer did the figures begin to lose their air of authenticity. The limbs were not quite in the right orientation, hands were slightly too small or large, and most obviously at least half had missing or extra fingers. There was also something about the expressions on the faces of these digitally created nubiles that was, well, a little off, like the expressions on waxworks but far more fleshy and uncanny.

Harrington was right: there was something nefarious about these pictures, like the photographer had captured a scene never meant to be exposed to public view. Anyway, it was enough to pique my interest and have me sign up for a few minutes' GPU time on the MidJourney server, which is accessed via the Discord app (I had no prior experience using Discord, which is apparently mainly used by gamers). I soon came to realise that quirks of anatomy are a common feature, particularly of the earlier versions of MidJourney - which as of this month is up to version 6.

Like all of these image-generating AIs, it responds to prompts fed in by the user, which can be either text, images or a combination of the two. There are also a variety of commands that can be used to tailor the images and to select basic parameters such as aspect ratio. Once a command is submitted the MidJourney bot, which you communicate with on Discord, usually responds within 30 seconds or so, presenting you with a grid of four images, the differences between which will depend on how you have configured the prompt. For example, increasing the '--chaos' level will add greater stylistic variation, while the '--stylize' parameter sets the level at which the bot will improvise around your prompt. There are also levers for 'Weird', which I've yet to find a use for, and 'Image weight', which allows you to tweak the degree to which either the image or the text part of a prompt determines the output. There are a lot of other little features but these are the ones I tend to use as standard.
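For anyone who prefers to see this spelled out, here is a minimal sketch of how those parameters end up in the prompt text sent to the bot. The helper `build_prompt` is hypothetical (MidJourney offers no such function; you simply type the prompt into Discord), and the value ranges are my assumptions from the documentation at the time of writing, so check the current docs before relying on them. Image prompts, as I understand it, go at the front of the prompt as URLs.

```python
# Hypothetical helper: assembles a MidJourney-style prompt string.
# The ranges below are assumptions and may differ between model versions.
ASSUMED_RANGES = {
    "chaos": (0, 100),     # how much the four grid images vary
    "stylize": (0, 1000),  # how freely the bot improvises around the prompt
    "weird": (0, 3000),    # experimental oddness
}


def build_prompt(text, image_urls=(), aspect_ratio=None,
                 chaos=None, stylize=None, weird=None, image_weight=None):
    """Return the text you would paste after /imagine in Discord."""
    # Image prompts (URLs) come first, then the text part.
    parts = list(image_urls) + [text.strip()]

    if aspect_ratio is not None:
        parts.append(f"--ar {aspect_ratio}")

    for name, value in (("chaos", chaos), ("stylize", stylize), ("weird", weird)):
        if value is None:
            continue
        low, high = ASSUMED_RANGES[name]
        if not low <= value <= high:
            raise ValueError(f"--{name} should be between {low} and {high}")
        parts.append(f"--{name} {value}")

    # Image weight balances the image part against the text part.
    if image_weight is not None:
        parts.append(f"--iw {image_weight}")

    return " ".join(parts)


print(build_prompt("Videodrome, surrealism, cybernetics",
                   aspect_ratio="3:2", chaos=40, stylize=250))
```

Leaving a parameter out of the call leaves it out of the prompt, so the bot falls back on its own defaults.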

So what's the point of this thing? What are people doing with it? Well, generalising somewhat, I'd say there are four main areas or genres of use, based on the public gallery on the MidJourney site and images which have garnered popularity on social media:

1 - Fake news or comedy images of celebrities, politicians and other public figures. This is where you got those images of Donald Trump seemingly being dragged by police to jail. Now, this stuff has only really taken off on MidJourney since they've released versions that can actually reproduce a good likeness of the person being punked. Version 4 was passable but required a lot of coaxing by adding real photos of the individual to the prompt, but things really took off with version 5 and especially 5.2, which can produce excellent likenesses of major political figures without the need to feed it a picture of the person. Version 6 (I've only used this for a few days) appears to be just as good, but with better overall complexity and compositional coherence. It's the potential for misuse - or really any use - of this feature that gets a lot of people worried about the possibility of AI undermining democracy or rendering all news images untrustworthy - as if this wasn't already well on the way.

2 - A picture of your dream girl, often suspiciously young looking, and either anime style Asian or Teutonic blonde babe in appearance. Yes really, I'm not glossing this; it's clear this is what's going on, and why should we be surprised that, in the world of OnlyFans and chatbot girlfriends, people immediately gravitate towards using AI in this way, as if the developers at MidJourney had a mainline into the deepest desires of the average extremely online male. But what really bothers me about the trend is how formulaic all the images are. It's all waify (or should that be Waifu?) skinny pixie girls or vivacious models of ambiguous age framed in soft light, staring out vacantly at the sex-starved prompter on the other side of the screen. None of these kinds of images are pornographic (more on that side of things later); if anything they're just boring in their cute Eurasian / Aryan predictability. I have no doubt that this is going to be a major growth area in AI development as it so neatly tracks the general state of social and sexual atomisation in the developed world. One could imagine going to your doctor with depression born of loneliness only to be prescribed a fully customisable AI companion. It's cheaper than therapy!

3 - Mainstream entertainment spin off type imagery / fan art. This needs little explanation, and it's perhaps understandable that, at a stage in which the people most likely to be using this technology are geeks and the extremely online, a significant proportion of the content will be Star Wars, Marvel, Pixar, Pokémon adjacent type images. So what? It's boring but it's entertainment, and that is what most people will be satisfied with.

4 - Decoration. I'm revealing my Baconian prejudices here, but for me perhaps the most uninspired use of this vastly powerful technology is in the production of purely decorative images, whether they be for marketing purposes, website design, or just stuff people can use at home. It's dull. End of. But it's also possibly the most "everyday" application image-generating AI will be used for. This is where we fear for the graphic designers who face an 'adapt or perish' situation when this stuff goes mainstream. In fact it's already going mainstream with Adobe Firefly, which will combine generative AI with Adobe's already wildly successful Photoshop and Illustrator products. So, I don't have a problem with using AI for basic graphic design, but it is for me - as the workaday application - thoroughly pedestrian and only likely to track the mediocrity of contemporary culture.

So what have I used this technology for? What has held my attention over the last nine months and encouraged me to pump about £200 into a monthly subscription and extra GPU time? After the initial novelty wore off, and I realised I couldn't just upload a picture of myself and expect it to produce a perfect likeness in whatever Kabuki drag scenario I chose, I began to explore what you could call its inner aesthetic tendencies. My attitude was straightforward: I wasn't interested in a digitally enhanced version of reality. What interested me was pushing the AI into making images of a world that could not, and perhaps should not, exist.

This is where I think the developers of MidJourney show their conservatism and adherence to the basic coordinates of traditional Western thought about aesthetics, since they constantly talk - in their Discord announcements - about 'prompt following' and 'coherence'. What this amounts to is fixing the horizons of the AI to the field of representation. The images the AI is meant to produce should visually represent as closely as possible the verbal and visual data fed into it in the prompt. If you ask for a black cat on a white mat, then that's what it should give you each time, except this is in aesthetic terms a very formal and empty description, which in and of itself could never be said to constitute the salient datum for a unique work of art. Either the same cat on the same mat would be produced each time, which would satisfy very few people, or an infinite number of radically different cats and mats would result, which would show MidJourney to be an engine of arbitrariness and rob the prompt engineer of their status as an "AI artist".

Now, the developers know this, which is why they've put so much effort into MidJourney's ability to understand natural language prompts and into training the AI to interpolate those prompts with its dataset (or supercluster as they call it) in an increasingly coherent and complex way. The results, as you can see with the comparisons between V4 and V6, are startling.

|

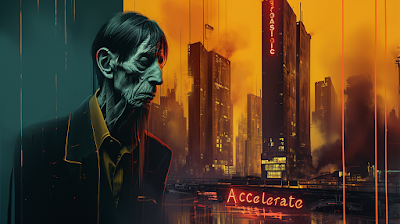

| Left V4 Right V6 - Prompt: Videodrome, surrealism, cybernetics + the same 4 prompt images |

Even so, I wonder what the ultimate end goal is for MidJourney's programmers when it comes to the question of representation. Do they want a tool that, if given an essay on the aesthetics of Van Gogh's Sunflowers, could perfectly reproduce the Dutch master's painting (an idea which makes certain assumptions about art criticism), or do they want an AI that is itself an artist? If it is the former then they would seem doomed to produce endless iterations that track the user's prompts to an ever greater degree of representational accuracy, which - since everyone will have a different idea of what that cat on the mat should look like - will never become the reliable tool of representation they might hope for, and will likely never satisfy those users - like myself - who hope AI can offer something uniquely nonhuman to the artistic process.

I don't know if that's what their engineers want; perhaps some, the more commercially minded of their staff, do. After all, MidJourney is usually promoted as a tool for artists, not as an artist in its own right. If this is their intention then what they'll sacrifice, and indeed some versions have already bordered on this, is the wildness and surprising interpretations MidJourney gives to the prompts, and in particular how it interpolates radically incoherent combinations of text and image.

This has been my entry point to MidJourney, the USP without which I doubt I would have stuck around. You could call it dialectical, though that's probably a bit high-flown. The attraction is the interplay between intention and randomness, and the feeling that there's something truly uncanny (a ghost in the machine if you like) behind the coding, that is working with you when you use it. This is also what makes it potentially addictive. You craft your prompt, maybe it takes only a minute, maybe it takes ten minutes, or twenty, before you fire it off into the heart of the rough beast. Then there is the delay while the magic happens; compare it to the gap between a dice throw and winning or losing. There is the slow incremental appearance of the grid, and finally the finished result, which you scrutinise for hitherto unknown aesthetic qualities - also count the fingers, if there are meant to be fingers, and especially if there are not. It is genuinely addictive and I think even more so when you're trying to produce weird and unconventional images, since half of the fun is trying to sneak something past the moderators in the hope that it'll push the result into more interesting territory.

And yes, there is moderation, lots of it. Generally speaking you can't pump explicit images into it, or words that are likely to generate explicit images. That is not to say that it doesn't generate explicit images anyway; it really does, and with very little help. I do wonder whether the moderation is aimed more at controlling the inner tendencies of the AI than of the users! I just can't imagine the supercluster training set and coding can be thoroughly purged of the raw material that goes into making up a pornographic image. If it knows what Botticelli's Venus looks like then it knows how to generate nude female figures. To exclude all images of nudity would cut it off from a large swath of the canon of Western art. This is another reason why I find no use for the --weird command. Just adding extra dissonance to the prompt, in either text or image form, is enough to throw the result out to odd places and seemingly activate its inner tendencies and interpretive habits.

For example, browsing other users' pictures I found that the term (Nether Regions) in brackets, which is a kind of soft English slang for genitals (from the idiom of hidden Hellish things), would push any image with organic content into looking, well, not like actual nether regions, but just more gnarly, as if the biological components were suffering from some awful disease. An odd discovery, and only one of many similar counter-intuitive examples of non-representational prompting. It doesn't give you what it says on the tin but it does something interesting and fairly consistently.

It's perhaps saying too much that there is a poetic quality to MidJourney, but it is striking the way in which it interpolates the different parts of a prompt, and can turn grammatically or semantically jarring words into coherent compositions. There is then an internal logic, perhaps beyond the human ability to make sense from disparate linguistic and visual data. This is perhaps its greatest strength as an artistic tool, and it'll be interesting to see whether the developers are curious enough or sufficiently philosophically minded not to smother MidJourney's wildness in a race for ever greater representational accuracy.
